Description

Cheapfake is a recently coined term that encompasses non-AI ("cheap") manipulations of multimedia content. Cheapfakes are known to be more prevalent than deepfakes. Cheapfake media can be created with editing software for image/video manipulation, or even without any software, by simply altering the context of an image/video: the media is shared alongside misleading claims. This alteration of context is referred to as out-of-context (OOC) misuse of media. OOC media is much harder to detect than fake media, since the images and videos have not been tampered with. In this challenge, we focus on detecting OOC images, and more specifically the misuse of real photographs with conflicting image captions in news items. The aim of this challenge is to develop and benchmark models that can detect whether given samples (a news image and its associated captions) are OOC.

Task 1: Participants are asked to come up with methods to detect conflicting image-caption triplets, which indicate miscontextualization. More specifically, given

Task 2: Participants are asked to come up with methods to determine whether a given image-caption pair is genuine (real) or falsely generated (fake). More specifically, given an

Challenge Website

https://detecting-cheapfakes.github.io/

Organisers

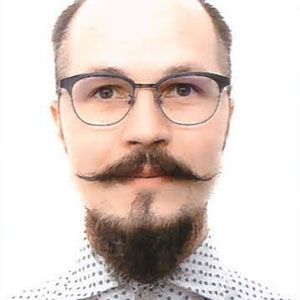

Duc-Tien Dang-Nguyen

University of Bergen & Kristiania University College

ductien.dangnguyen@uib.no

Sohail Ahmed Khan

University of Bergen

sohail.khan@uib.no

Cise Midoglu

Simula Metropolitan Center for Digital Engineering

cise@simula.no

Michael Riegler

Simula Metropolitan Center for Digital Engineering

michael@simula.no

Pål Halvorsen

Simula Metropolitan Center for Digital Engineering

paalh@simula.no

Minh-Son Dao

National Institute of Information and Communications Technology

dao@nict.go.jp

Description

Video analysis research is important in autonomous driving as it can help with accident prediction. Dashcam videos can provide valuable information for this task. However, there is a lack of well-annotated, large-scale datasets for accident prediction in Asian countries. To address this, a large-scale vehicle collision dataset from Taiwan was collected and labeled for frame- and video-level predictions. The dataset aims to inspire participants from academia and industry to improve safety in autonomous driving. Two tracks of this challenge are vehicle accident prediction and vehicle accident type recognition.

Challenge Website

https://sites.google.com/view/tvcd-tw

Organisers

Assist.Prof. Chih-Chung Hsu

Institute of Data Science,

National Cheng Kung University

A/Prof. Li-Wei Kang

Department of Electrical Engineering, National Taiwan Normal University

Assist.Prof. Chao-Yang Lee

Department of Computer Science and Information Engineering,

National Yunlin University of Science and Technology

Dr. Ming-Ching Chang

Department of Computer Science,

College of Engineering and Applied Sciences,

University at Albany, State University of New York

Description

Tactical Troops: Anthracite Shift is a top-down, turn-based game that mixes tactical skills with the feeling of '80s sci-fi movies. Players can command a troop of soldiers to fight on one of 24 multi-player maps against artificial bots or human opponents. The goal of our challenge is to learn an efficient model for predicting the chance that a player will score a frag in the next game turn (i.e., eliminate at least one troop of the opposing player) using screenshot data and basic information about the game state. A high-quality prediction model for this task will be used in a number of analytical tasks related to the game. It will also help us design better AI-controlled agents for future video games. The competition will launch on February 12.

Challenge Website

https://knowledgepit.ai/icme-2023-grand-challenge/

Organisers

Andrzej Janusz

QED Software

Rafał Tyl

Silver Bullet Labs

Dominik Ślęzak

eSensei Sp. z o. o.

Description

Degraded multimedia data, especially data captured in real rain, pose serious challenges to scene understanding. Although existing computer vision technologies have achieved improved results under synthetic conditions, especially those with simple degradation factors, they often fail on real-world rain scene images, in which the background content has suffered various complex degradations. In outdoor applications such as autonomous driving and monitoring systems in particular, the degradation factors on rainy days include rain intensity, occlusion, blur, droplets, reflection, wipers, and their blended effects, which make scene contents hard to understand. Consequently, these adverse degradation effects often lead to unsatisfactory performance in various vision tasks, such as scene understanding, object detection, and identification. There is an urgent need for optimized solutions that meet real-world needs. See the official challenge website for details.

Challenge Website

http://aim-nercms.whu.edu.cn/news/265.html

Organisers

Xian Zhong

School of Computer Science and Artificial Intelligence

Wuhan University of Technology

zhongx@whut.edu.cn

Kui Jiang

Huawei Technologies

jiangkui5@huawei.com

Zheng Wang

School of Computer Science

Wuhan University

wangzwhu@whu.edu.cn

Chih-Chung Hsu

Department of Electrical Engineering

Institute of Data Science, National Cheng Kung University

cchsu@gs.ncku.edu.tw

Chia-Wen Lin

Department of Electrical Engineering

National Tsing Hua University

cwlin@ee.nthu.edu.tw

Description

Object detection has been studied extensively in computer vision and has made tremendous progress in recent years. Image segmentation goes a step further by trying to find the exact boundaries of the objects in an image, and semantic segmentation pursues more than the location of an object, going down to pixel-level information. However, because most deep learning-based algorithms are computationally heavy, it is hard to run these models on embedded systems, which have limited computing capabilities. In addition, the existing open datasets for traffic scenes in ADAS applications usually cover the main lane, adjacent lanes, and different lane markings (i.e. double line, single line, and dashed line) in Western countries. These scenes differ from those in Asian countries like Taiwan, where many motorcycle riders speed along city roads, so semantic segmentation models trained only on the existing open datasets require extra techniques to segment complex scenes in Asian countries. Often, complicated applications involve both object detection and segmentation, and it is difficult to accomplish these two tasks with separate models on a resource-limited platform.

The goal is to design a lightweight single deep learning model that supports multiple tasks, including semantic segmentation and object detection, and is suitable for constrained embedded systems handling traffic scenes in Asian countries like Taiwan. We focus on segmentation and object detection accuracy, power consumption, real-time performance optimization, and deployment on MediaTek’s Dimensity Series platform. With its heterogeneous computing capabilities, such as the CPUs, GPUs, and APUs (AI processing units) embedded in the system-on-chip products, the Dimensity Series platform provides developers with the high performance and power efficiency needed to build AI features and applications. Developers can target specific processing units within the system-on-chip, or they can let the MediaTek NeuroPilot SDK intelligently handle the processing allocation for them. For more details on the contest, please refer to the ICME2023 GC PAIR competition website.

Challenge Website

https://pairlabs.ai/ieee-icme-2023-grand-challenges/

Organisers

Dr. Po-Chi Hu

pochihu@nycu.edu.tw

Prof. Ted Kuo

tedkuo@nycu.edu.tw

Prof. Jeng-Neng Hwang

hwang@uw.edu

Prof. Jiun-In Guo

jiguo@nycu.edu.tw

Dr. Marvin Chen

marvin.chen@mediatek.com

Dr. Hsien-Kai Kuo

hsienkai.kuo@mediatek.com

Prof. Chia-chi Tsai

cctsai@gs.ncku.edu.tw

IEEE ICME 2023 is inviting proposals for its Grand Challenge (GC) program. Please see the following guidelines for this year’s grand challenge submission:

Grand Challenge Proposals should follow the requirements below:

Please submit the proposals in PDF format to the relevant Grand Challenge chairs

Grand Challenge proposal submission deadline: 15-Dec-22. All submissions are due 11:59 PM Pacific time.

Adeia Inc., USA

ningxu01@gmail.com

Plymouth University, UK

l.sun@plymouth.ac.uk

OPPO, USA

vladyslav.zakharchenko@gmail.com

Peking University, China

liujiaying@pku.edu.cn